Since the launch of its AlphaGo program in 2016, DeepMind has earned a reputation as one of the leading AI startups.

Its acquisition by Google in 2014 has only helped the company to further enhance its reputation.

This partnership has benefitted Google in a number of ways.

For example, DeepMind-developed algorithms power Google’s Adaptive Battery and Adaptive Brightness systems.

For DeepMind, working with Google has allowed them the opportunity to focus on developing AI systems with real-world applications.

If you’ve never heard of this trailblazing startup, or maybe want to learn more about them, this article is for you.

After looking at the history of the company, we will explore in depth some of its more exciting projects and initiatives.

The Story Behind DeepMind

The startup, the brainchild of Demis Hassabis, began life in London in 2010.

Hassabis, a chess master as a teenager and a computer science graduate, also holds a PhD in cognitive neuroscience.

While studying for his PhD, Hassabis met Shane Legg, a research associate at University College London’s Gatsby Computational Neuroscience Unit.

Along with Mustafa Suleyman, a childhood friend who established the Muslim Youth Helpline counselling service, they founded DeepMind.

Their aim, according to Demis Hassabis in a Guardian interview, is to find a “meta-solution to any problem”.

To put it another way, DeepMind is aiming to replicate the way that the human brain works.

Their systems are developed to be cognitive, capable of understanding and undertaking a wide range of tasks.

Initially DeepMind Focused on Gaming Applications

Their first system learned how to play 49 different Atari console games.

The system was trained with deep reinforcement learning, using only the on-screen pixels and the game score as input.

From here DeepMind developed a series of neural networks and deep learning models that eventually became AlphaGo.

AlphaGo is a system that teaches itself how to play the game Go.

By studying past games, positions, and moves, the system learned 3,000 years’ worth of information in just 40 days.

This information, once processed, allows the system to make its own strategies.

AlphaGo’s power lies in its ability to learn and improve on previous decisions.

As Hassabis observed, this means the system is not “constrained by the confines of human knowledge.”

In October 2015 AlphaGo defeated Fan Hui, a professional Go player, a result that was made public in January 2016.

In 2017, AlphaGo defeated Ke Jie, then the number one ranked Go player in the world.

DeepMind’s Success Attracted the Attention of Google

In January 2014 Google spent £400 million purchasing DeepMind.

At the time this was Google’s largest European purchase.

This investment allowed DeepMind to make great strides towards its ultimate aim: creating intelligence that can solve any and every problem.

Acquiring DeepMind has enabled Google to make a number of significant AI-related developments.

DeepMind’s applications power Google’s image recognition software and Google image search. The latter, as implemented on Google+, is able to search through thousands of unlabelled images with just a simple keyword prompt.

As DeepMind continues to evolve and develop, its work is having increasingly important implications in real-world scenarios.

DeepMind’s Relationship with Google

Since the acquisition, a number of DeepMind’s developments have been used to enhance Google’s infrastructure.

Their work has improved the products and services Google provides to millions of people all over the world.

Data Centre Efficiency Improvements

Since 2016 DeepMind has been developing an AI-driven recommendation system.

This system is currently in use, improving energy efficiency levels in Google’s many data centres.

DeepMind approached this challenge with the belief that even a minor change can make a significant reduction in energy usage.

Even a small reduction in energy usage will reduce harmful CO2 emissions.

This, in turn, helps to combat climate change.

Currently, their machine-learning-driven systems are being used to control data centre cooling.

While the system has direct control, expert supervision is always at hand to monitor any decisions that are made.

Because the system runs in the cloud, it can make energy-saving decisions in Google data centres around the world.

How Deep Learning Manages Cooling Systems

Each data centre contains thousands of servers, powering numerous popular services such as Gmail, YouTube, and Google’s search feature.

The company needs these servers to run as efficiently and as reliably as possible.

The reliability and safety of the servers are key and cannot be compromised.

Any energy-saving solution devised by DeepMind had to respect this.

In a paper published by Google, data centre engineer Jim Gao explained how the system works.

The system DeepMind developed uses smart sensors to take a snapshot of the data centre cooling system.

A snapshot is taken every 5 minutes.

The information gathered by these snapshots is then fed into deep neural networks.

These networks predict how different combinations of potential actions will affect future energy consumption.

The system analyses all these outcomes, running them past a strict set of safety constraints, before selecting the most energy-efficient option.

The selected action or actions are then sent back to the data centre.

Here they are verified by the local control system before being implemented.
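The selection step described above can be sketched in a few lines of Python. This is a minimal illustration only, not Google’s implementation: the `predict_energy_kw` function is a made-up stand-in for the deep neural networks, and the `Action` fields, the safety limits, and the example numbers are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Action:
    """A hypothetical candidate setting for the cooling plant."""
    chilled_water_temp_c: float
    pump_speed_pct: float


def predict_energy_kw(snapshot: dict, action: Action) -> float:
    """Stand-in for the neural networks: an invented linear model."""
    return (
        snapshot["it_load_kw"] * 0.1
        + (20.0 - action.chilled_water_temp_c) * 8.0
        + action.pump_speed_pct * 1.5
    )


def is_safe(action: Action) -> bool:
    """Illustrative safety constraints; the limits are assumptions."""
    temp_ok = 10.0 <= action.chilled_water_temp_c <= 18.0
    pump_ok = 30.0 <= action.pump_speed_pct <= 100.0
    return temp_ok and pump_ok


def select_action(snapshot: dict, candidates: List[Action]) -> Optional[Action]:
    """Return the safe candidate with the lowest predicted energy use."""
    safe = [a for a in candidates if is_safe(a)]
    if not safe:
        return None  # nothing passes the constraints, so recommend nothing
    return min(safe, key=lambda a: predict_energy_kw(snapshot, a))


snapshot = {"it_load_kw": 5000.0}  # one five-minute sensor snapshot (invented)
candidates = [
    Action(chilled_water_temp_c=12.0, pump_speed_pct=80.0),
    Action(chilled_water_temp_c=16.0, pump_speed_pct=60.0),
    Action(chilled_water_temp_c=19.5, pump_speed_pct=40.0),  # breaks the temperature limit
]
best = select_action(snapshot, candidates)
print(best)
```

In this toy example the third candidate has the lowest predicted energy use, but it is filtered out by the safety check before the comparison is made, so the second candidate is chosen. Filtering first and optimising second mirrors the order of steps described in the text.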

These Systems Will Improve Over Time

DeepMind’s energy-saving solutions have already been significantly improved.

Initially, operators had to manually vet each suggestion before the action was implemented.

Thanks to deep learning and system optimisation, the system now operates within a narrower regime.

This means that it can operate independently, reliably and safely, without the need for regular human intervention.

Within a few months, the DeepMind-developed system was delivering consistent energy savings of around 30% on average.

As the system continues to run and is further refined, these results will continue to improve.

Over time, the boundaries within which the system is allowed to operate will also be expanded.

This again will allow for greater energy savings to be made.

Dan Fuenffinger, an operator at one of Google’s data centres, has worked alongside the system.

He observed that it was “amazing to see the AI learn to take advantage of winter conditions and produce colder than normal water, which reduces the energy required for cooling within the data centre.”

This ability to respond to conditions and climatic changes allows the system to become more efficient.

As Fuenffinger also remarked, “Rules don’t get better over time, but AI does.”

While this system is currently only applied in Google’s data centres, there is potential to scale it for use in other industry settings.

This would allow energy consumption in those settings to become more efficient.

As waste is reduced, these systems will make a significant contribution to counteracting the effects of climate change.

Wind Farm Efficiency

It is not just in data centres that DeepMind has affected Google’s energy consumption.

DeepMind-developed systems are also helping to increase the value of energy produced by Google’s wind farms in the central United States.

DeepMind’s AI-driven systems predict the output of a wind farm up to 36 hours ahead of actual generation.

This system has been developed by training neural networks on large volumes of historical turbine data and local weather forecasts.

This training has enabled the system to recommend optimal hourly delivery commitments.

These commitments are given to the corresponding power grid in advance, meaning that energy produced by the wind farm can be scheduled and put to use.

This lessens the reliance on fossil fuels for energy production.

DeepMind believes that the initial application of this system has increased the value of wind energy by about 20%.

As the system is further refined and improved, this figure is expected to increase.

It also helps to make this unpredictable energy source a more commercially viable and reliable option.

DeepMind and Google believe that this application of machine learning will strengthen the case for further adoption of wind power.

If wind power can become a more reliable power source, our reliance on fossil fuels will decrease.

DeepMind Drives Improvements in Audio Analysis

As we will see later in the article, many DeepMind developments have focused on the optimisation of healthcare provision.

However, DeepMind’s machine learning also has a number of other applications.

One of these is audio analysis.

Voice-driven AI assistants, such as Siri or Alexa, are becoming increasingly popular.

Despite the increase in their usage, the gap between computer and human speech is still large.

With the aim of closing this gap, DeepMind has developed a text-to-speech system with a difference.

WaveNet is driven by a neural network that seeks to replicate the sound waves produced by human speech.

This sets it apart from conventional text-to-speech systems, which typically stitch together short fragments of recorded human speech.

WaveNet Speaks for Google

Initially a prototype, WaveNet took about a second to generate 0.02 seconds of audio.

These initial systems were slow and too complicated for consumer usage.

However, further development, in collaboration with Google’s text-to-speech team, has allowed DeepMind’s research teams to refine the model.

An improved version is currently 1,000 times faster than the prototype.

As a result, WaveNet is used to generate voices in Google Assistant.

Similarly, Google Cloud Platform users can also use WaveNet-generated voices through its Text-to-Speech service.
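To put the speed figures above in perspective, they can be combined in a short worked calculation. This assumes the 1,000-fold improvement applies to the same measure as the prototype’s rate (audio produced per second of compute), which is an interpretation rather than something stated in the text.

```python
# Figures quoted in the text above.
prototype_audio_per_second = 0.02  # seconds of audio produced per second of compute
speedup = 1000                     # improved model relative to the prototype

# Assumption: the speed-up multiplies the prototype's generation rate directly.
improved_audio_per_second = prototype_audio_per_second * speedup

print(f"Prototype: {1 / prototype_audio_per_second:.0f} s of compute per 1 s of audio")
print(f"Improved:  about {improved_audio_per_second:.0f} s of audio per 1 s of compute")
```

Under that assumption the prototype ran roughly 50 times slower than real time, while the improved version generates speech faster than it can be played back, which is what makes it practical for an interactive assistant.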

DeepMind Applications in the Healthcare Industry

Like many other AI-focused operations, DeepMind is looking to make improvements in the healthcare industry.

Dr Dominic King, health lead for Google DeepMind, believes that AI-driven predictive medicine applications will allow us to manage conditions before they develop.

King and DeepMind believe that using algorithms in this way can transform how clinical healthcare plans are developed and implemented.

King told the Digital Healthcare Show that “medical imaging is where we are at the forefront.”

He also revealed his excitement at “the potential of using AI to look at a medical record and electronic health record data to make predictions”.

These predictions flag up which patients are more likely to develop conditions such as sepsis or need ICU care.

To develop this application DeepMind has partnered with the US Department of Veterans Affairs.

This partnership has allowed DeepMind to access data from veterans’ health records.

With this access DeepMind has developed a system that reads and analyses a patient’s medical history, flagging up potential future problems.

This application has been successfully used to identify which patients may develop serious kidney problems.

AI Assistants for Healthcare Professionals

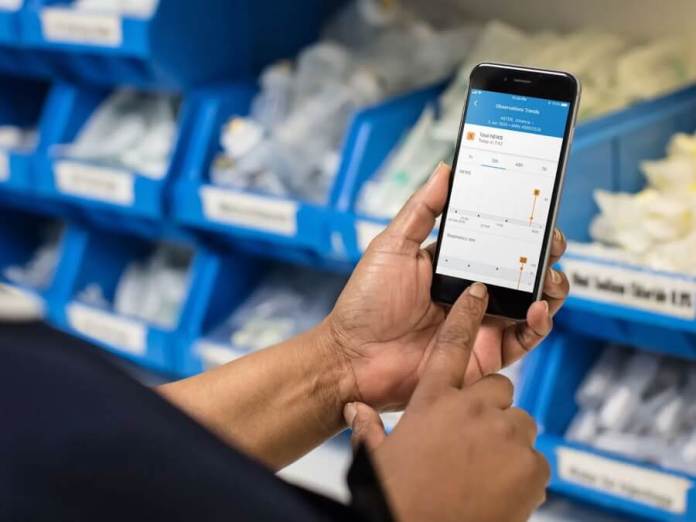

DeepMind’s Streams mobile app has already been implemented at a number of NHS hospitals and trusts.

Imperial College Healthcare NHS Trust was one of the first to adopt the technology.

Healthcare providers at St Mary’s Hospital were able to download the app onto their mobile devices.

This gives them secure access to patient records and test results.

The trust and DeepMind believe that by providing healthcare professionals with real-time access to test results, clinicians will be able to make faster decisions.

This speeds up treatment times, enabling care to begin before a patient’s condition can deteriorate.

It is also hoped that by saving time, more focus will be placed on patient care.

Dr Sanjay Gautama, Imperial’s CCIO, said of the initiative, “As one of the NHS’s global digital exemplars, we are proud to be leading the way in using advances in digital technology to make tangible improvements to the care of our patients.”

Alongside real-time access to test and scan results, the app’s patient safety alert feature aims to make patients safer.

This is done by alerting staff to test results that suggest urgent assessment or intervention is required.

Since first launching in 2017, DeepMind Health has rolled Streams out to a number of NHS trusts in the UK.

Nurses have reported that regular use of the app can save them two hours a day.

Patient Privacy Concerns

As with many technological innovations, personal data protection is always a concern.

In 2017 the UK’s data watchdog, the Information Commissioner’s Office, ruled that a data-sharing arrangement between DeepMind and an NHS trust had breached data protection law.

This decision was made because patients were not made fully aware of how their data was being used.

However, DeepMind and Imperial College Healthcare NHS Trust have sought to allay these concerns. Both have confirmed that the data cannot be accessed or used for any purposes other than those related to the app.

DeepMind Solutions to Prevent Sight Loss

DeepMind is not only interested in improving patient care and streamlining treatment times and processes.

They have also identified a number of other areas where their systems can make an impact.

One such area of potential impact is in the treatment of eye disease.

It’s estimated that 1 in 11 of the world’s adult population has diabetes.

People with diabetes are 25 times more likely to suffer from sight problems, including blindness, than people without the condition.

However, if eye disease is identified and treated early, 98% of severe sight loss cases can be either reduced or prevented.

Most people who lose their sight will do so because of age-related macular degeneration (AMD).

AMD causes almost 200 people to lose their sight in the UK every day.

Early detection and treatment, using AI and deep learning powered systems, have the potential to save many people’s sight.

To this end, DeepMind has teamed up with Moorfields Eye Hospital.

Finding a Solution to Sight Loss

Eye conditions have traditionally been diagnosed by taking digital scans of the back of the eye.

This method has also dictated treatment plans.

However, these scans can be highly complex, meaning that even skilled professionals take time to correctly analyse them.

Conventional computer analysis tools have also, so far, been unable to fully read these scans.

DeepMind hopes that its approach will improve the systems that read these scans, not only presenting a more complete picture but also speeding up analysis.

This, in turn, will speed up diagnosis and treatment times.

A faster diagnosis means that patients will be treated more quickly, potentially saving some if not all of their sight.

DeepMind is preparing to launch a working prototype that is capable of detecting many complex eye conditions in real time.

DeepMind Aids in Navigating Unfamiliar Situations Without Maps

A new city can be difficult to navigate, especially if you are in a foreign country.

Until we learn visual markers, which help us get a sense of our bearings, we are forced to rely on maps or digital solutions.

However, even smartphone apps can prove unreliable.

They can be outdated or simply fail due to low battery or connectivity issues.

Some less well-known locations may not be mapped at all.

This is especially likely if you are in a remote destination.

Developers at DeepMind may have the solution.

A paper published in 2019, Cross-View Policy Learning for Street Navigation, revealed how the company was approaching the issue.

Transforming Mapping Capabilities

DeepMind’s work is inspired by observing how quickly humans can learn to navigate a new location by simply reading a map.

Consulting maps, as well as picking up visual clues, helps the brain to absorb and learn its new surroundings.

With this in mind, developers are creating neural network agents that can read images observed from a location point.

Once processed, the system can then predict the next correct action to take.

Trained through a deep reinforcement learning process, the network learns to adapt to its new environment.

Similar learning systems are also using deep learning to teach AI to navigate 3D mazes, as well as to carry out other unsupervised auxiliary tasks.

However, unlike these studies, DeepMind’s mapping approach uses city-scale real-world data.

This includes footpaths, subways, tunnels, complicated intersections and other difficult topological features.

In their Cross-View Policy paper, the company described their AI system as being trained with a ground view corpus.

In addition, their system is able to target parts of a city using top-down visual information.

By combining approaches, and giving the system as much information as possible, a far better mapping system can be created.

This system gives the user a more detailed picture of their surroundings than the traditional GPS generalisation approach.

Using Information to Navigate a City

As much information as possible, such as detailed regional aerial maps, geographical coordinates, and street-level images, is gathered, matched up and collated.

Once correctly collated the system is trained.

Developers devised a system consisting of three modules.

The first was a convolutional module, responsible for visual perception. The second was a long short-term memory (LSTM) module that identified and recorded location-specific features.

The final module was a policy recurrent neural module.

This produced a distribution over actions.
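To make “a distribution over actions” concrete, the sketch below converts a list of raw scores into probabilities with a softmax and then samples one action. It is an illustration of the general idea only: the action names and score values are hypothetical, and the scores simply stand in for whatever the policy module outputs. The paper’s actual architecture is not reproduced here.

```python
import math
import random

# Hypothetical discrete action set for a street-navigation agent.
ACTIONS = ["turn_left", "forward", "turn_right"]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    shift = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sample_action(scores, rng):
    """Sample one action according to the softmax probabilities."""
    probs = softmax(scores)
    return rng.choices(ACTIONS, weights=probs, k=1)[0]


scores = [0.5, 2.0, -1.0]   # invented policy outputs, one per action
probs = softmax(scores)
rng = random.Random(0)      # seeded so that runs are repeatable
action = sample_action(scores, rng)
print(dict(zip(ACTIONS, (round(p, 3) for p in probs))), action)
```

Sampling from the distribution, rather than always taking the highest-scoring action, is a common way for reinforcement learning agents to keep exploring alternatives while they learn.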

DeepMind’s systems, trained on topological information of New York and Pittsburgh, were first rolled out in StreetAir.

This is a multi-view outdoor street environment based on the StreetLearn application.

StreetLearn is an interactive collection of panoramic street-view photographs.

These images are taken from Google Maps and Street View applications.

Aerial images were arranged to correspond with ground view images of the same location.

Once the system is trained it is able to navigate Street View graphs of panoramic images.

Once the AI came within 200 metres of the target, the system was rewarded, reinforcing good behaviour and practices.

This was intended to encourage quick and accurate routes to be selected.
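A reward of this kind can be sketched as a simple distance check. The haversine formula used below is a standard way to measure the distance between two latitude and longitude points. The function names, the reward value of 1.0, and the example coordinates are assumptions made for illustration, not details taken from the paper.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres
GOAL_RADIUS_M = 200.0         # threshold quoted in the text


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def goal_reward(agent, target):
    """Return 1.0 once the agent is within the goal radius, otherwise 0.0."""
    return 1.0 if haversine_m(*agent, *target) <= GOAL_RADIUS_M else 0.0


target = (40.7580, -73.9855)  # illustrative point in New York
near = (40.7590, -73.9855)    # roughly 111 m north of the target
far = (40.7680, -73.9855)     # roughly 1.1 km north of the target
print(goal_reward(near, target), goal_reward(far, target))
```

A reward that only fires near the goal is sparse, so in practice agents trained this way usually need many episodes before the signal shapes their routes.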

Applications in Autonomous Vehicles

In testing, systems navigating with both aerial and street-level images proved more accurate than systems using only ground-level data.

This reinforced DeepMind’s belief that analysing a range of information from different angles allows for more accurate, speedier route navigation.

In the published paper the research team concluded that their “results suggest that the proposed method transfers agents to unseen regions with higher zero-shot rewards and better overall performance compared to single view agents.”

Eventually, the system should be able to adapt quickly and seamlessly to new city locations, offering reliable and accurate mapping information.

If it proves successful, this system will be useful not only for pedestrians.

It may also prove a key application in the development of driverless cars.

Currently, DeepMind is training its systems on learning the streets of Paris, London, and New York.

A massive task, it is made slightly easier by breaking each city into regions.

Each region can be learnt, meaning an autonomous vehicle can navigate around Harlem or Greenwich Village.

The next step will be teaching the system to navigate between separate regions, allowing self-driving cars to safely navigate an entire city.

Image Detection and Realistic Generation

As well as the conventional applications of AI, DeepMind looks to implement its solutions in a range of novel fields.

While these applications may be unusual they are certainly proving to be effective.

One such application is in the tracking of herds of animals on the Serengeti.

The company’s ecological interests have seen its science and research team developing an AI system to study animal behaviour.

Much of this research has been done on animals at the Serengeti National Park in Tanzania.

Before the involvement of DeepMind, hundreds of motion-activated field cameras captured the movements of animals throughout the park.

Since these cameras were placed in various locations by the Serengeti Lion Research Program, they have recorded millions of images.

These images have allowed conservationists to track animal movement, recording the distribution and demography of the park’s inhabitants.

However, this information is only useful once it has been processed and labelled by scientists or volunteers.

Even the simplest tasks, such as identifying the animal in the image, must be done by a human operative.

This can take hours, even with the help of online volunteers from around the world.

It can take almost a year from an image being taken for it to be properly labelled and become useful.

Speeding Up Image Annotation with DeepMind

DeepMind has trained an AI model with the aim of streamlining and speeding up this process.

Using the Snapshot Serengeti dataset their model is able to automatically detect and identify animals.

It is also able to count the number of animals in an image.

DeepMind believes that its system, which is capable of identifying almost all of the species resident in the park, is as reliable as any volunteer annotator.

The company also believes that its model can speed up the process of analysing the images by about 9 months.

The company hopes that the system can be used in the field, as it is capable of operating on “modest” hardware with intermittent or unstable internet access.

Image Generation Software

In the field of image generation, a system that is capable of creating realistic, or convincing images is the ultimate aim.

It is with this goal in mind that DeepMind has partnered with Heriot-Watt University.

Their paper, “Large Scale GAN Training for High Fidelity Natural Image Synthesis”, has revealed the strides made in this field.

The two are close to achieving realistic synthetic image generation.

Currently, the systems developed by the team are able to accurately approximate images of food, pets and landscapes.

These images are being created with an increasingly impressive level of accuracy.

Some of the best synthetic images have been described as almost indistinguishable from a real image taken by a camera.

The paper also reveals the potential for future applications of this technology.

How Are These Images Generated?

This has been achieved by using large, optimized GANs, or generative adversarial networks.

In simple terms, these are two-part neural networks consisting of generators and discriminators.

Generators produce sample images.

Discriminators try to distinguish between generated images and real images, which pushes the generators to produce ever more convincing samples.
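The tug-of-war between the two parts of a GAN can be illustrated by computing the standard losses on a handful of invented discriminator outputs. This is a sketch of the general technique only: no networks are trained, the probabilities are made up, and the binary cross-entropy and non-saturating generator losses shown are common textbook variants, not necessarily the objective DeepMind used.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real images should score near 1, fakes near 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating loss: the generator wants its fakes to score near 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Invented discriminator outputs (estimated probability that an image is real).
d_real = [0.9, 0.8, 0.95]
weak_fakes = [0.1, 0.2, 0.05]    # easily spotted by the discriminator
strong_fakes = [0.6, 0.7, 0.55]  # more convincing samples

print(discriminator_loss(d_real, weak_fakes), generator_loss(weak_fakes))
print(discriminator_loss(d_real, strong_fakes), generator_loss(strong_fakes))
```

As the fakes become more convincing, the generator’s loss falls while the discriminator’s loss rises. Training alternates between the two, so each side’s improvement creates a harder problem for the other.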

The model, which has around 158 million parameters, was trained on a large image data set, enabling it to capture intricate details.

Increasing the number of channels available also helped to improve results.

This allows the system to represent an image in complex detail.

Meanwhile, “truncation”, which restricts the random inputs fed to the generator, traded some variety for more accurate images.
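In the GAN literature, truncation usually refers to limiting how extreme the generator’s random input values can be, typically by redrawing any value whose magnitude exceeds a threshold. The sketch below shows that resampling idea using only the standard library. The threshold of 0.5, the vector length of 8, and the function name are arbitrary choices for illustration.

```python
import random


def truncated_latent(dim, threshold, rng):
    """Sample a latent vector from N(0, 1), redrawing values beyond the threshold."""
    z = []
    for _ in range(dim):
        value = rng.gauss(0.0, 1.0)
        while abs(value) > threshold:
            value = rng.gauss(0.0, 1.0)  # redraw until it falls inside the range
        z.append(value)
    return z


rng = random.Random(42)  # seeded so that runs are repeatable
z = truncated_latent(dim=8, threshold=0.5, rng=rng)
print([round(v, 3) for v in z])
```

A smaller threshold keeps the inputs closer to the most typical values, which tends to give cleaner but less varied images. A larger threshold restores variety at some cost to fidelity.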

Once the system was operating satisfactorily the developers turned their attention to ensuring that the design was scalable.

This was done by using JFT-300M, a dataset of over 300 million real images.

It took developers two days to train the system, dubbed BigGAN, on this larger data set using Google’s Tensor Processing Units.

These AI accelerators are application-specific integrated circuits, developed with the express purpose of enabling and enhancing machine learning capabilities.

Once the training was complete the system achieved an Inception Score (IS) of 166.3 and a Fréchet Inception Distance (FID) of 9.6.

These two measurements gauge the quality and realism of image generation models; a higher IS and a lower FID are better.

These scores were significant improvements on the previous best results of 52.52 for IS and 18.65 for FID.

A Concern for Lawmakers

These realistic generated images are sometimes known as deepfakes, a portmanteau of deep learning and fake.

Deepfakes are increasingly coming to the attention of lawmakers.

In the United States, members of Congress contacted Dan Coats, then Director of National Intelligence, requesting a report on the potential impact of deepfakes.

Congress was concerned that the technology could harm both democracy and national security.

As well as lawmakers, developers are also taking an interest in this problem.

Startups such as Truepic are developing systems that can automatically detect deepfakes or manipulated videos and images.

DeepMind Continues to Drive Innovation

Since its acquisition by Google, DeepMind has made significant strides in AI technology and development.

These applications have been implemented in a wide range of fields, from healthcare to wildlife conservation and energy efficiency.

While some of these innovations, such as 3D mapping, are still in development, they have the potential to make a huge impact.

This may seem like a perfect partnership. However, tensions between the two organisations have long been reported.

Much of this stems from Google’s desire to commercialise DeepMind’s many projects and systems.

This sits against DeepMind’s self-stated desire to become a home for long-term research.

However, despite the disagreements, the two continue to work successfully together.

This is largely because they share the belief that AI can help to discover new information.

This can then be used to find solutions to some of the world’s most pressing problems.

Images: Flickr Unsplash Pixabay Wiki & Others