Mark Zuckerberg, Facebook’s CEO, recently triggered a wave of laughter, especially among Twitter users, after he said the word "nipple" during the company’s earnings call. Asked about inappropriate content on the platform, he replied that it is much easier to build an AI system that can identify a nipple than one that can detect hate speech.

The comment from the CEO of the world’s largest social network prompted a stream of jokes on social media. Zuckerberg, however, was not joking. Abusive content on Facebook takes many forms, including racial slurs, drug listings, scams, and nudity, to mention just a few.

Worse, these cases cannot all be handled with a single technique. Zuckerberg consistently makes two points when he talks about taking down abusive content on Facebook:

1. Facebook is investing in artificial intelligence tools to proactively identify abusive posts and remove them.

2. The company intends to recruit 20,000 content moderators by the close of this year to look for and review objectionable content.

For the first time, Facebook disclosed how it uses its artificial intelligence (AI) systems for content moderation. Automated AI tools assist in seven areas: graphic violence, nudity, hate speech, suicide prevention, fake accounts, terrorist content, and spam.

Graphic violence and nudity are among the types of problematic posts spotted by computer vision. The software is trained to detect such content based on certain elements in the image. Facebook sometimes takes the graphic content down and at other times puts it behind a warning screen.

Hate speech is difficult to monitor, especially with artificial intelligence, because the intent behind such language varies. It may be self-referential or sarcastic, or it may even be meant to raise awareness about hate speech.

It is even harder for AI to recognize hate speech in less widely spoken languages, because it has fewer examples to learn from.

According to Guy Rosen, Facebook’s VP of product management, the company still has a lot of work to do. He added that the company’s goal is to reach abusive content before anyone on the platform sees it.

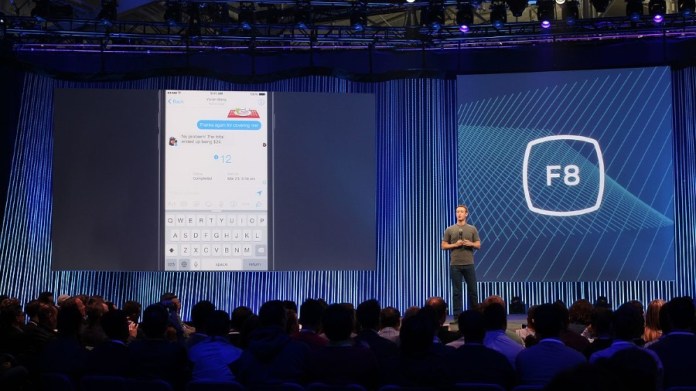

Facebook opened up about its artificial intelligence (AI) tools after Mark Zuckerberg and his team were grilled by the US Congress regarding the recent Cambridge Analytica scandal.

The digital consultancy was said to have gained access to the personal data of about 87 million users of the platform and to have used it without their authorization. The scandal raised questions about the platform’s policies, including the company’s responsibility to its more than 2.2 billion users.

In an effort to remain transparent about its operations, Facebook also recently disclosed internal guidelines used by its content moderators in assessing and handling objectionable content.

Despite the thousands of moderators the platform employs, such material still appears to be finding its way onto Facebook. Nevertheless, Mark Zuckerberg said that hiring tens of thousands of moderators and developing AI tools marks a significant step towards solving such problems.

Source: CNET