Architecturally, artificially intelligent systems are diverse, but they all have one component in common: datasets. The problem is that accuracy generally depends on large sample sizes, and some datasets are much harder to obtain than others.

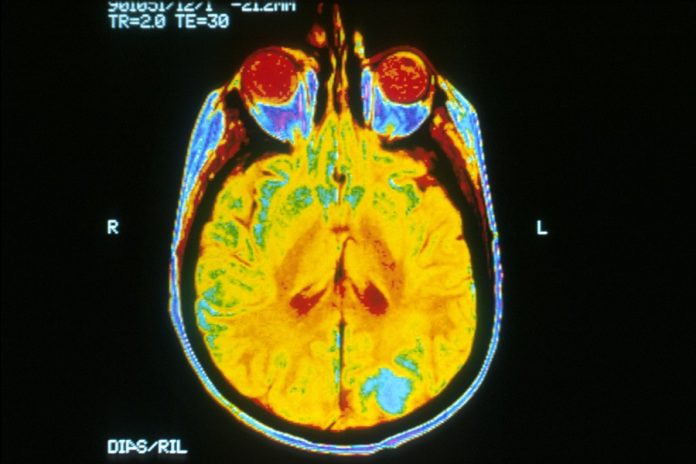

However, researchers from the Mayo Clinic, Nvidia, and the MGH & BWH Center for Clinical Data Science believe they have found a remedy: a neural network that generates its own training data, in the form of synthetic 3D magnetic resonance images (MRIs) of brains with cancerous tumors.

The researchers described the neural network in a paper titled “Medical Image Synthesis for Data Augmentation and Anonymization using Generative Adversarial Networks,” which was recently presented at the Medical Image Computing & Computer-Assisted Intervention conference held in Granada, Spain.

“We show that for the first time we can generate brain images that can be used to train neural networks,” said Hu Chang, a lead author on the paper and a senior research scientist at Nvidia.

The artificial intelligence (AI) system was built with Facebook's PyTorch deep learning framework and trained on an Nvidia DGX platform.

It uses a generative adversarial network (GAN), a two-part neural network made up of a generator that produces samples and a discriminator that tries to tell real samples from generated ones. The aim of the GAN is to produce convincing MRIs, particularly of abnormal brains.
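To make the generator/discriminator tug-of-war concrete, here is a minimal sketch of the standard GAN objectives written as plain Python functions. It assumes the discriminator outputs a probability that a scan is real; the function names are illustrative, and the loss form shown (binary cross-entropy with the common "non-saturating" generator loss) is the textbook formulation, not necessarily the exact loss used in the paper.

```python
import math

EPS = 1e-12  # keeps log() away from 0

def bce(prob: float, target: float) -> float:
    """Binary cross-entropy of one predicted probability against a 0/1 target."""
    p = min(max(prob, EPS), 1.0 - EPS)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """The discriminator is rewarded for scoring real scans 1 and generated scans 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake: list[float]) -> float:
    """Non-saturating generator loss: G is rewarded when D scores its samples as real."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)

if __name__ == "__main__":
    # A discriminator that easily spots fakes: low loss for D, high loss for G.
    print(discriminator_loss([0.9, 0.9], [0.1, 0.1]))  # ~0.211
    print(generator_loss([0.1, 0.1]))                  # ~2.303
    # At the theoretical equilibrium D outputs 0.5 for everything.
    print(discriminator_loss([0.5], [0.5]))            # ~1.386 (2 ln 2)
```

Training alternates between lowering the discriminator's loss and lowering the generator's loss; as the generator improves, the discriminator's scores drift toward 0.5 and its loss rises toward 2 ln 2.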

The researchers drew on two publicly available datasets: the Multimodal Brain Tumor Image Segmentation Benchmark (BRATS) and the Alzheimer's Disease Neuroimaging Initiative (ADNI).

Both datasets were used to train the GAN, and 20% of the 264 BRATS studies were held out to test performance. Although compute and memory constraints forced the team to downsample the scans from a resolution of 256 x 256 x 108 to 128 x 128 x 54, they used the original images for comparison.
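The two preprocessing steps above can be sketched in a few lines. Both helpers, `holdout_split` and `downsample_2x`, are hypothetical: the shuffling seed, the rounding of 20% of 264 studies, and the use of 2x2x2 block averaging are assumptions for illustration, since the article does not say how the split was drawn or how the scans were resampled. Halving every axis is simply what maps 256 x 256 x 108 onto 128 x 128 x 54.

```python
import random

def holdout_split(study_ids, test_fraction=0.2, seed=0):
    """Shuffle study IDs and hold out a fraction for testing (hypothetical helper)."""
    ids = list(study_ids)
    random.Random(seed).shuffle(ids)
    n_test = round(len(ids) * test_fraction)  # rounding rule is an assumption
    return ids[n_test:], ids[:n_test]  # (train, test)

def downsample_2x(volume):
    """Halve each axis of a 3D volume (nested lists indexed [x][y][z]) by
    averaging non-overlapping 2x2x2 blocks. Assumes every dimension is even."""
    nx, ny, nz = len(volume), len(volume[0]), len(volume[0][0])
    out = []
    for x in range(0, nx, 2):
        plane = []
        for y in range(0, ny, 2):
            row = []
            for z in range(0, nz, 2):
                block = [volume[x + dx][y + dy][z + dz]
                         for dx in (0, 1) for dy in (0, 1) for dz in (0, 1)]
                row.append(sum(block) / 8.0)
            plane.append(row)
        out.append(plane)
    return out

if __name__ == "__main__":
    train, test = holdout_split(range(264))
    print(len(train), len(test))  # 211 53 under this rounding rule
    # Halving all three axes leaves 8x fewer voxels.
    print((256 * 256 * 108) // (128 * 128 * 54))  # 8
    tiny = [[[float(x + y + z) for z in range(4)] for y in range(4)] for x in range(4)]
    small = downsample_2x(tiny)
    print(len(small), len(small[0]), len(small[0][0]))  # 2 2 2
```

The 8x reduction in voxel count is what makes the memory savings substantial for 3D volumes.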


After training on images from ADNI, the generator learned to produce synthetic brain scans complete with cerebrospinal fluid, grey matter, and white matter. Then, when it was trained on the BRATS dataset, it produced complete segmentations with tumors.

The GAN also annotated the scans, a task that would take a team of human specialists hours to complete. And because it treated the tumor and the brain anatomy as separate labels, researchers could change a tumor's location and size, or even "transplant" it into scans of a healthy brain.
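Because tumor and anatomy live in the same label map as different values, editing a tumor reduces to editing labels. The sketch below works on a 2D slice and uses hypothetical label codes (0 to 4); it shows only the "transplant and move" idea, not resizing, and assumes the edited map would then be handed to a label-conditioned generator to synthesize a matching MRI, as the article describes.

```python
# Hypothetical label codes for one 2D slice of a segmentation map.
BACKGROUND, CSF, GREY, WHITE, TUMOR = 0, 1, 2, 3, 4

def transplant_tumor(healthy, source, dy=0, dx=0):
    """Copy TUMOR labels from `source` into a copy of `healthy`, shifted by (dy, dx).
    Tumor pixels that would land outside the slice are dropped."""
    out = [row[:] for row in healthy]  # leave the healthy map untouched
    h, w = len(out), len(out[0])
    for y, row in enumerate(source):
        for x, label in enumerate(row):
            ty, tx = y + dy, x + dx
            if label == TUMOR and 0 <= ty < h and 0 <= tx < w:
                out[ty][tx] = TUMOR
    return out

if __name__ == "__main__":
    healthy = [[WHITE] * 4 for _ in range(4)]
    source = [[BACKGROUND] * 4 for _ in range(4)]
    source[1][1] = TUMOR
    edited = transplant_tumor(healthy, source, dy=1, dx=2)
    for row in edited:
        print(row)  # the tumor now sits at row 2, column 3
```

Changing `dy`/`dx` moves the tumor; copying from a different `source` map swaps in a different tumor shape.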

“Conditional GANs are perfectly suited for this. [It can] remove patients’ privacy concerns [because] the generated images are anonymous,” Chang said.
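In a conditional GAN, both networks see the condition as well as the image. One common way to do this for label maps, used in pix2pix-style image-to-image GANs, is to one-hot encode the labels into channels and stack them with the image. The helpers below are a hypothetical pure-Python sketch of that input layout only; they are not the paper's architecture, and the channel ordering is an assumption.

```python
def one_hot_channels(label_map, num_labels):
    """Turn an HxW label map into `num_labels` binary HxW channels."""
    return [
        [[1.0 if label == k else 0.0 for label in row] for row in label_map]
        for k in range(num_labels)
    ]

def conditional_discriminator_input(label_map, image, num_labels):
    """Stack the condition (one-hot label channels) with the image channel, so the
    discriminator judges whether the image is plausible for *that* label map."""
    return one_hot_channels(label_map, num_labels) + [image]

if __name__ == "__main__":
    labels = [[0, 1], [2, 1]]
    image = [[0.1, 0.2], [0.3, 0.4]]
    stacked = conditional_discriminator_input(labels, image, num_labels=3)
    print(len(stacked))  # 4 channels: 3 label channels + 1 image channel
    print(stacked[1])    # [[0.0, 1.0], [0.0, 1.0]]
```

Since the generator is driven by a label map rather than by a specific patient's scan, the output is a new image consistent with that anatomy, which is the property the quote ties to anonymity.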

So how did it perform? When the team trained machine learning models on a combination of real brain scans and synthetic scans generated by the GAN, the models reached 80% accuracy, 14% better than a model trained on real data alone.
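The phrase "14% better" can be read two ways, and each implies a different baseline for the real-data-only model. The arithmetic below simply works out both readings from the 80% figure; which one the researchers meant is not stated in the article, and the function names are illustrative.

```python
def baseline_if_points(new_pct, gain_points):
    """Baseline accuracy if the gain is measured in absolute percentage points."""
    return new_pct - gain_points

def baseline_if_relative(new_pct, gain_pct):
    """Baseline accuracy if the gain is a relative improvement over the baseline."""
    return new_pct / (1 + gain_pct / 100)

if __name__ == "__main__":
    print(baseline_if_points(80, 14))               # 66
    print(round(baseline_if_relative(80, 14), 1))   # 70.2
```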

According to Chang, future research will explore larger datasets and higher-resolution training images across different patient populations. He added that improved versions of the model may shrink the boundaries around tumors so that they no longer look "superimposed."

Source: VentureBeat